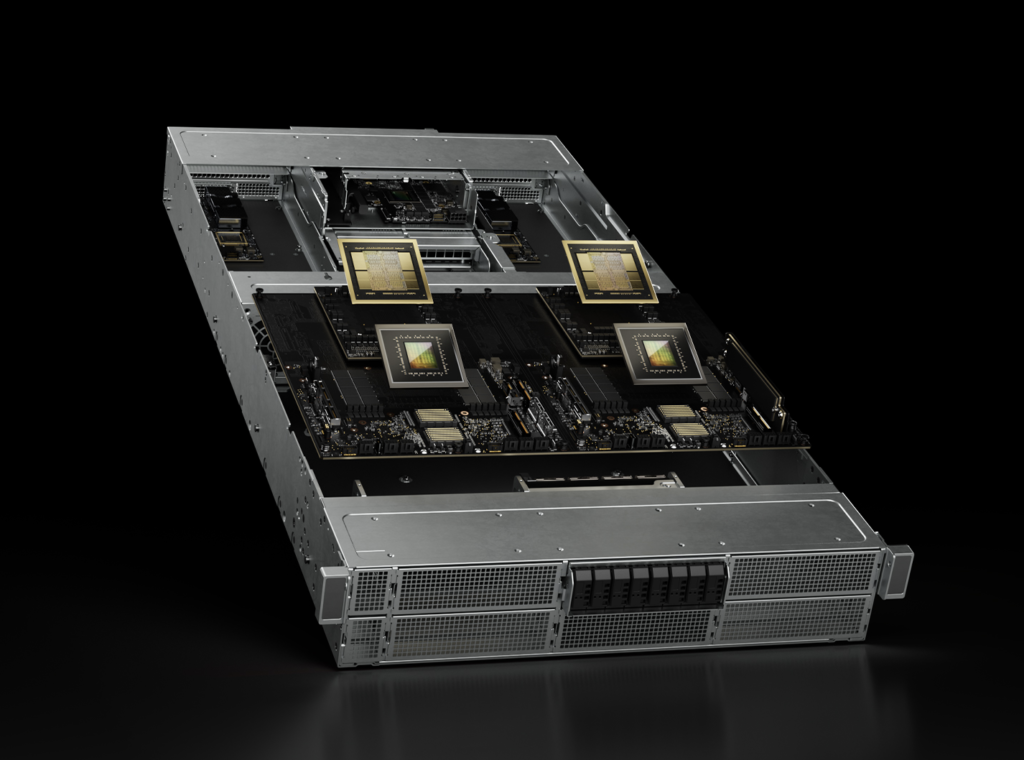

The NVIDIA GB200 Grace Blackwell Superchip combines one Grace CPU with two Blackwell B200 GPUs in a single module, which sets it apart from most other AI accelerators on the market. Here’s a breakdown of its key differentiators:

1. Unprecedented Performance:

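- Massive Compute Power: NVIDIA positions GB200 as a large step up from its predecessor, the H100, particularly in large language model (LLM) training and inference. For the rack-scale GB200 NVL72 configuration, NVIDIA's launch materials cite up to 4x faster training and up to 30x faster real-time inference than the same number of H100 GPUs; these are vendor figures for specific workloads. The gains are attributed to the two B200 GPUs per superchip and the NVLink-C2C interconnect that ties them to the Grace CPU.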

- Multi-Modal Excellence: GB200 is also aimed at multi-modal workloads that process text, images, and video together, where memory capacity and interconnect speed matter as much as raw compute.

2. Integrated Design:

- CPU-GPU Synergy: Most accelerators are GPU-only parts that attach to a separate host CPU over PCIe. GB200 instead packages an Arm-based Grace CPU (72 Neoverse V2 cores) alongside the two GPUs, so data preparation and orchestration run next to the GPUs rather than across a slower host link.

- Simplified System Architecture: Because the CPU and GPUs ship as one module joined by a coherent link, system integrators have fewer components to match and developers have less host-to-device plumbing to manage.

3. Advanced Interconnect:

- NVLink-C2C: The NVLink-C2C chip-to-chip interconnect provides up to 900 GB/s of bidirectional bandwidth between the Grace CPU and the GPUs, roughly seven times what a PCIe Gen5 x16 link offers. This reduces CPU-GPU transfer bottlenecks, which matters for the massive datasets and models involved in modern AI workloads.
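
To put interconnect bandwidth in perspective, the following minimal sketch estimates the ideal time to move a block of data across two links. All figures are assumptions for illustration, not measurements: 450 GB/s per direction for NVLink-C2C (half of NVIDIA's quoted 900 GB/s bidirectional peak), about 64 GB/s per direction for PCIe Gen5 x16, and a hypothetical 140 GB payload standing in for the FP16 weights of a 70-billion-parameter model. The helper `transfer_seconds` is an illustrative name, not part of any NVIDIA API.

```python
# Assumed per-direction peak bandwidths in GB/s (illustrative, not measured).
NVLINK_C2C_GB_PER_S = 450.0     # half of the quoted 900 GB/s bidirectional peak
PCIE_GEN5_X16_GB_PER_S = 64.0   # approximate PCIe Gen5 x16 peak per direction


def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Return the ideal time to move size_gb gigabytes at a sustained bandwidth.

    Ignores latency, protocol overhead, and contention, so real transfers
    take longer than this lower bound.
    """
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_per_s


# Hypothetical payload: 70e9 parameters * 2 bytes (FP16) = 140 GB.
payload_gb = 140.0

links = {
    "NVLink-C2C": NVLINK_C2C_GB_PER_S,
    "PCIe Gen5 x16": PCIE_GEN5_X16_GB_PER_S,
}
for name, bandwidth in links.items():
    seconds = transfer_seconds(payload_gb, bandwidth)
    print(f"{name}: {seconds:.2f} s to move {payload_gb:.0f} GB")

# Ratio of the two assumed peaks; this is independent of payload size.
ratio = NVLINK_C2C_GB_PER_S / PCIE_GEN5_X16_GB_PER_S
print(f"Assumed bandwidth ratio: {ratio:.1f}x")
```

This is a lower bound only: sustained throughput on either link depends on transfer size, access pattern, and software stack, so the ratio is a rough guide rather than a benchmark.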

4. Focus on Large Language Models:

- LLM Optimization: GB200 is designed with large language models in mind. The Blackwell GPUs include a second-generation Transformer Engine with support for low-precision formats such as FP4, which raises throughput and lowers memory use for LLM training and inference.

Key Competitors and Comparisons:

- AMD MI300X: AMD’s flagship AI accelerator is a GPU-only part that pairs with a separate host CPU; the integrated CPU-GPU design in AMD’s lineup is the MI300A APU. Both differ from the GB200 in architecture, interconnect, and software ecosystem.

- Google TPU: Google’s Tensor Processing Units deliver high performance on the AI workloads they target and are offered through Google Cloud, but they may not offer the same level of versatility and general-purpose computing capabilities as the GB200.

- Intel Xeon Scalable Processors with AI Acceleration: Intel builds AI acceleration, such as AMX matrix instructions, into its Xeon processors, but these CPU-based features may not match the performance of dedicated AI accelerators like the GB200.

In Summary:

The NVIDIA GB200 stands out from the competition through its performance on large models, its integrated CPU-GPU design, its high-bandwidth NVLink-C2C interconnect, and its specific focus on large language models. It represents a significant step forward in AI hardware for training and serving very large models.

Please note: This is a general overview, and specific performance comparisons may vary depending on the workload and application.
